Video-based Side-Channel Attack on Wearable (VR) Devices

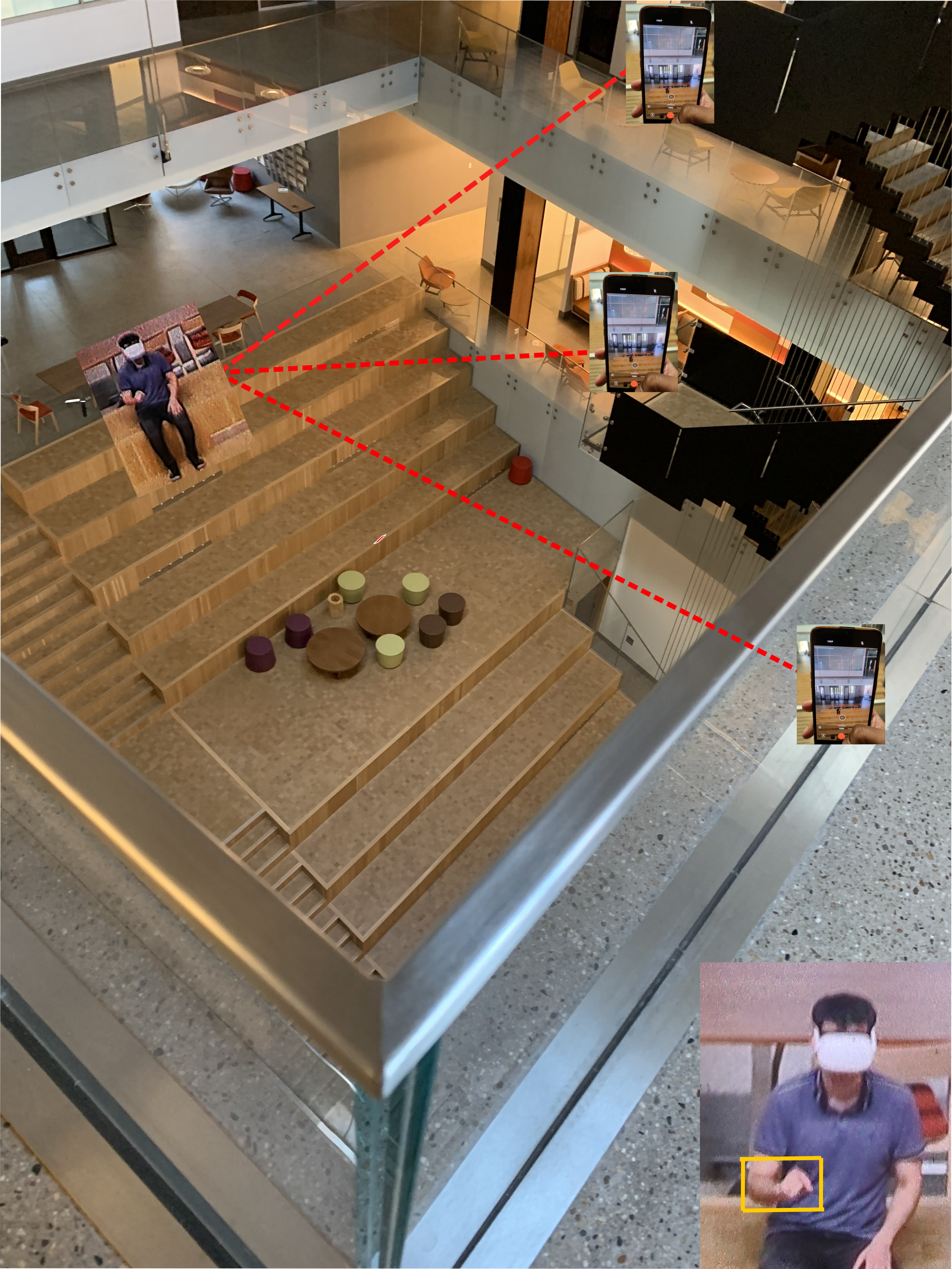

Attacker's view of a target user's typing from a distance.

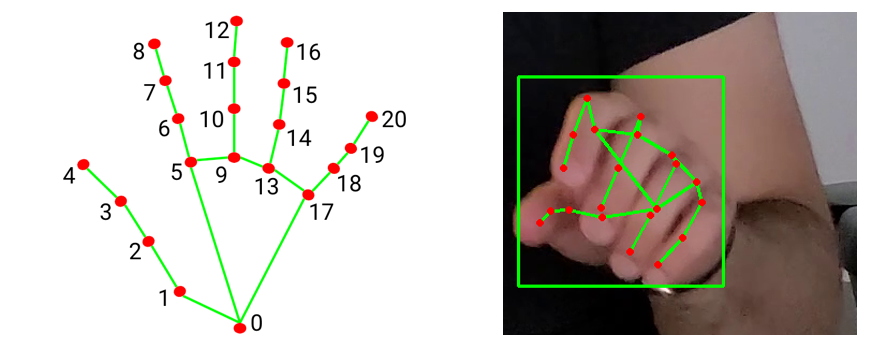

Landmark points detected on the user's hand while they type.

Project Overview

Hidden Reality (HR) is a video-based side-channel attack. It shows that even though the virtual screen of a VR device is not in an adversary's direct line of sight, indirect observations such as the user's hand gestures can be exploited to steal private information. On average, the Hidden Reality model deciphers over 75% of the text inputs.

Hidden Reality Model

Implementing the Hidden Reality attack model for the various attack scenarios involves the following steps:

- Video Preprocessing

- Localization and Hand Landmark Tracking

- Click Detection

- Character Inference

- Word Prediction
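
The following is a minimal sketch of how the last three steps could fit together. It is not the Hidden Reality implementation. It assumes that an upstream hand-landmark tracker already yields one (x, y) fingertip position per video frame in normalized image coordinates. Everything else is a hypothetical illustration chosen only to make the pipeline concrete: the staggered QWERTY layout, the `min_depth` threshold, the "fingertip dips and lifts" click heuristic, nearest-key character inference, and the Hamming-distance word matcher.

```python
from math import hypot

# Hypothetical QWERTY layout; a real attack would calibrate key positions
# to the attacker's camera view of the user's hands.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]


def key_centres(x0=0.1, y0=0.4, dx=0.08, dy=0.1):
    """Map each key to an assumed (x, y) centre in normalized image coordinates."""
    centres = {}
    for r, row in enumerate(ROWS):
        offset = r * dx / 2  # stagger each row by half a key width
        for c, ch in enumerate(row):
            centres[ch] = (x0 + offset + c * dx, y0 + r * dy)
    return centres


def detect_clicks(ys, min_depth=0.02):
    """Return frame indices where the fingertip bottoms out (a local maximum of y,
    since image y grows downward) after descending at least min_depth."""
    if len(ys) < 3:
        return []
    clicks = []
    top = ys[0]  # highest fingertip position since the last click
    for i in range(1, len(ys) - 1):
        top = min(top, ys[i])
        if ys[i - 1] < ys[i] >= ys[i + 1] and ys[i] - top >= min_depth:
            clicks.append(i)
            top = ys[i]  # the next click needs a fresh descent
    return clicks


def infer_char(point, centres):
    """Assign a click to the key whose centre is nearest to the fingertip."""
    x, y = point
    return min(centres, key=lambda ch: hypot(centres[ch][0] - x, centres[ch][1] - y))


def predict_word(chars, vocabulary):
    """Correct the inferred string to the same-length vocabulary word with the
    fewest mismatched characters (ties go to the earlier word)."""
    candidates = [w for w in vocabulary if len(w) == len(chars)]
    if not candidates:
        return chars
    return min(candidates, key=lambda w: sum(a != b for a, b in zip(w, chars)))


def decode(track, centres, vocabulary, min_depth=0.02):
    """track: one (x, y) fingertip position per video frame."""
    ys = [y for _, y in track]
    chars = "".join(infer_char(track[i], centres) for i in detect_clicks(ys, min_depth))
    return chars, predict_word(chars, vocabulary)


if __name__ == "__main__":
    centres = key_centres()

    def press(key, lift=0.05):
        # Hover above the key, dip onto its centre, then lift again.
        x, y = centres[key]
        return [(x, y - lift), (x, y - lift / 2), (x, y), (x, y - lift / 2)]

    # Simulated track for "cat" where the tracker lands on "s" instead of "a".
    track = press("c") + press("s") + press("t")
    print(decode(track, centres, ["cat", "dog", "cow"]))  # prints ('cst', 'cat')
```

The usage example shows why word prediction is kept as a separate step. A single misattributed click ("s" for "a") yields a non-word, and a dictionary constraint recovers the intended input. A practical attack would more likely weight candidates by key distance and word frequency rather than use a plain character-mismatch count.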

Datasets

With the approval of the university's Institutional Review Board (IRB), videos of registered volunteer participants were captured while they entered text on the virtual screen of a Meta Quest 2. In total, a corpus of 368 short video clips was recorded across the following attack scenarios: password entry, PIN entry, graphical-lock pattern entry, text entry, and email entry.